Data Processing

- Data integrity: The accuracy, completeness, consistency, and trustworthiness of data throughout its lifecycle

- Data transfer: The process of copying data from a storage device to memory, or from one computer to another.

- Data manipulation: The process of changing data to make it more organized and easier to read

- Some threats to data integrity:

- Human error

- Viruses

- Malware

- Hacking

- System failures

| Data Constrain | Definition | Examples |

|---|---|---|

| Data type | Values must be of a certain type: date, number, percentage, Boolean, etc. | If the data type is a date, a single number like 30 would fail the constrain and be invalid |

| Data range | Values must fall between predefined maximum and minimum values | If the data range is 10-20, a value of 30 would fail the constrain and be invalid |

| Mandatory | Values can't be left blank of empty | If age is mandatory, that value must be filled in |

| Unique | Values can't have a duplicate | Two people can't have the same mobile phone number within the same service area |

| Regular expression (regex) patterns | Values must patch a prescribed pattern | A phone number must match ###-###-#### (no other characters allowed) |

| Cross-field validations | Certain conditions for multiple fields must be satisfied | Values are percentages and values from multiple fields must add up to 100% |

| Primary-key | (Databases only) Values must be unique per column | |

| Set-membership | (Databases only) Values for a column must come from a set of discrete values. | Value for a column must be set to Yes, No, or Not Applicable |

| Foreign-key | Values for a column must be unique values coming from a column in another table | |

| Accuracy | The degree to which the data conforms to the actual entity being measured or described | If value for zip codes are validated by street location, the accuracy of the data goes up |

| Completeness | The degree to which the data contains all desired components or measures | If data for personal profiles required hair and eye color, and both are collected, the data is complete |

| Consistency | The degree to which the data is repeatable from different points of entry or collection | If a customer has the same address in the sale and repair database, the data is consistent. |

- Using

VLOOKUPto find your needed value:=VLOOKUP('identity','range', the # of column', 'FALSE' - Using

DATEDIF(start_date, end_date,unit):start_date: the date represents the first dayend_date: the date represents the last day

| Unit | Reurns |

|---|---|

| Y | The number of complete years in the period |

| M | The number of complete months in the period |

| D | The number of days in the period |

| YM | The difference between the months in start_date and end_date. The days and years of the dates are ignored |

| YD | The difference between the days of start_date and end_date. The years of the dates are ignored. |

-

Type of insufficient data:

- Data from only one source

- Data that keeps updating

- Outdated data

- Geographically-limited data

-

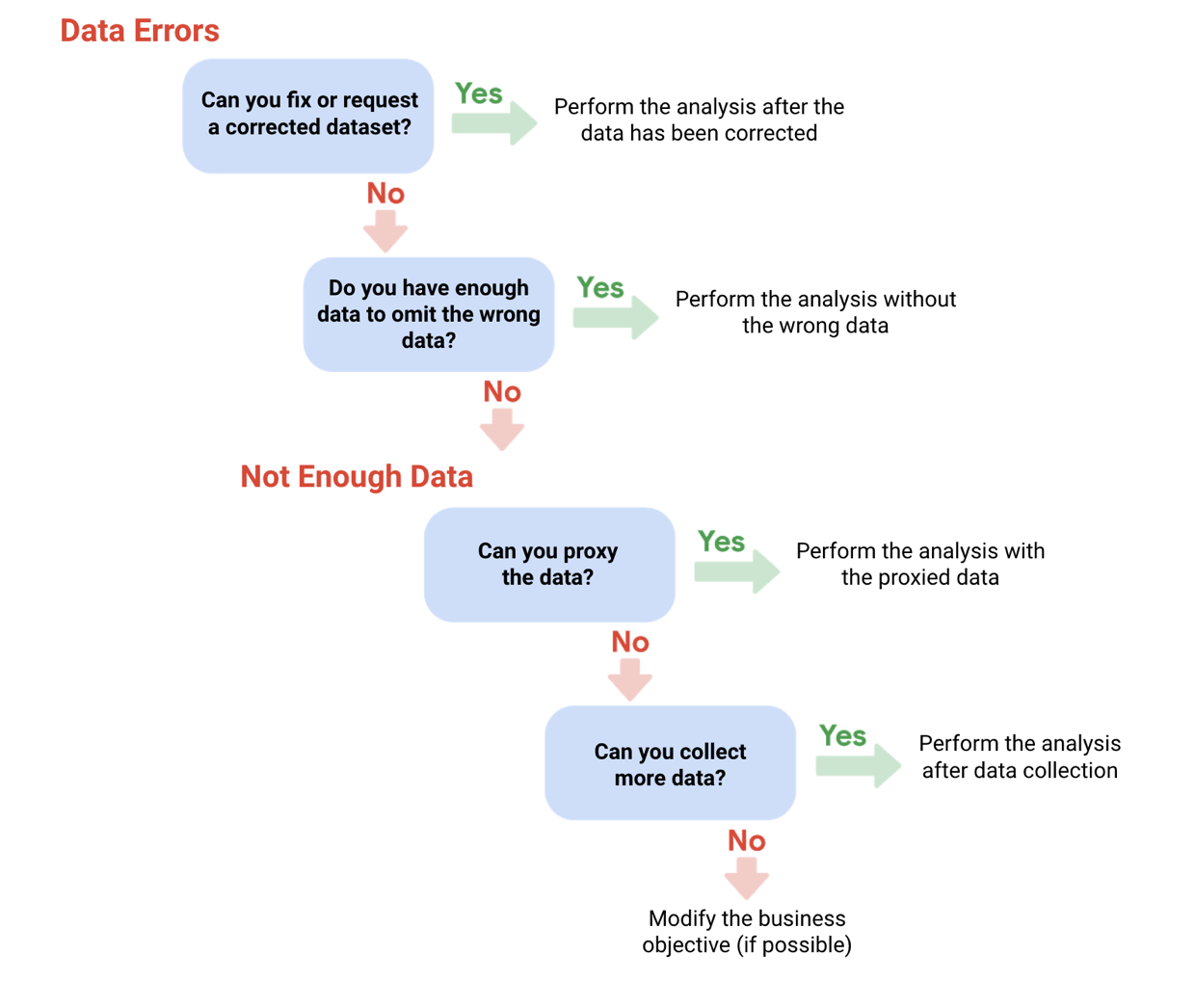

Data issue 1: No data

| Possible Solutions | Examples of solutions in real life |

|---|---|

| Gather the data on a small scale to perform a preliminary analysis and then request additional time to complete the analyis after you have collected more data. | If you are surveying employees about the they think about a new performance and bonus plan, use a sample for a preliminary analysis. Then, ask for another 3 weeks to collect the data from all employees. |

| If there isn't time to collect data, perform the analysis using proxy data from other datasets (this is the most common workaround) |

If you are analyzing peak travel or commuters but don't have the data for a particular city, use the data from another city with a similar size and demographic |

- Data issue 2: Too little data

| Possible Solutions | Examples of solutions in real life |

|---|---|

| Do the analysis using proxy data along with actual data | If you are analyzing trends for owners of golden retrievers, make your dataset larger by including the data from owners of labradors |

| Adjust your analysis to align with the data you already have | If you are missing data for 18-to-24-year-olds, do the analysis but note the following limitation in your report: this conclusion applies to adults 25 years and older only |

- Data issue 3: Wrong data, including data with errors *

| Possible Solutions | Examples of solutions in real life |

|---|---|

| If you have the wrong data because requirements were misunderstood, communicate the requirements again | If you need the data for female voters and received the data for male voters, restate your needs |

| Identify errors in the data and, if possible, correct them at the source by looking for a pattern in the errors | If your data is in a spreadsheet and there is a conditional statement or boolean causing calculations to be wrong, change the conditional statement instead of just fixing the calculated values |

| If you can't correct data errors yourself, you can ignore the wrong data and go ahead with the analysis if your sample size is still large enough and ignoring the data won't cause systematic bias | if your dataset was translated from a different language and some of the translations don't make sense, ignore the data with bad translation and go ahead with the analysis of the other data. |

Important note: Sometimes data with errors can be a warning sign that the data isn't reliable. Use your best judgement |

CALCULATE SAMPLE SIZE

| Terminology | Definitions |

|---|---|

| Population | The entire group that you are interested in for your study. For example, if you are surveying people in your company, the population would be all the employees in your company. |

| Sample | A subset of your population. Just like a food sample, it is called a sample because it is only a taste. So if your company is too large to survey every individual, you can survey a representative sample or your population |

| Margin of Error | Since a sample is used to represent a population, the sample's result are expected to differ from what the result would have been if you had surveyed the entire population. This difference is called the margin of error. The smaller the margin of error, the closer the results of the sample are to what the result would have been if you had surveyed the entire population. |

| Confidence level | How confident you are in the survey results. For example, a 95% confidence level means that if you were to run the same survey 100 times, you would get similar results 95 of those 100 times. Confidence level is targeted before you start your study because it will affect how big your margin of error is at the end of your study |

| Confidence interval | The range of possible values that the population's result would be at the confidence level of the study. This range is the sample result +/- the margin of error. |

| Statistical Power | Can be calculated and reported for a completed experiment to comment on the confidence one might have in the conclusions drawn from the results of the study, It can also be used as a tool to estimate the number of observations or sample size required in order to detect an effect in an experiment. |

| Statistical significance | The determination of whether your result could be due to random chance or not. The greater the significance, the less due to chance. |

-

Things to remember when determining the size of your sample:

- Don't use a sample size less than 30. It has be statistically proven that 30 is the smallest sample size where an average result of a sample starts to represent the average result of a population.

- The confidence level most commonly used is 95%, but 90% can work in some cases

- Increase the sample size to meet specific needs of your project:

- For a higher confidence level, use a larger sample size

- To decrease he margin of error, use a larger sample size

- For greater statistical significance, use a larger sample size

-

Why a minimum sample of 30?

This recommendations is based on the Central Limit Theorem(CLT) in the field of probability and statistics. As sample size increases, the result more closely resemble the normal (bell-shaped) distribution from a large number of samples. A sample of 30 is the smallest sample size for which the CLT is still valid. Researchers who rely on regression analysis - statistical methods to determine the relationships between controlled and dependent variables - also prefer a minimum sample of 30. Central Limit Theorem -

Sample sizes vary by business problem

- Sample size will vary based on the type of business problem you are trying to solve.

-

For example, if you live in a city with a population of 200,000 and get 180,000 people to respond to a survey, that is a large sample size. But without actually doing that, what would an acceptable, smaller sample size look like?

-

Would 200 be alright if the people surveyed represented every district in the city?

-

Answer: It depends on the stakes.

-

A sample size of 200 might be large enough if your business problem is to find out how residents felt about the new library

-

A sample size of 200 might not be large enough if your business problem is to determine how residents would vote to fund the library

-

You could probably accept a larger margin of error surveying how residents feel about the new library versus surveying residents about how they would vote to fund it. For that reason, you would most likely use a larger sample size for the voter survey.

WHEN DATA ISN'T READILY AVAILABLE

- Proxy data examples: Sometimes the data to support a business objective isn't readily available. This is when proxy data is useful.

| Business Scenario | How proxy data can be used |

|---|---|

| A new car model was just launched a few days ago and the auto dealership can't wait until the end of the month for sales data to come in. They want sales projections now. | The analyst proxies the number of clicks to the car specifications on the dealership's website as an estimate of potential sales at the dealership |

| A brand new plant-based meat product was only recently stocked in grocery stores and the supplier needs to estimate the demand over the next for years | The analyst proxies the sales data for a turkey substitute made out of tofu that has been on the market for several years. |

| The Chamber of Commerce wants to know how a tourism campaign is going to impact travel to their city, but the results from the campaign aren't publicly available yet. | The analyst proxies the historical data for airline bookings to the city one to three months after a similar campaign was run six months earlier. |

CLEANING DATA

-

Dirty data: Data that is incomplete, incorrect, or irrelevant to the problem you're trying to solve

-

Data engineer: Transform data into a useful format for analysis and give it a reliable infrastructure. This means they develop, maintain, and test databases, data processors and related systems.

-

Data warehousing specialists: Develop processes and procedures to effectively store and organize data

-

Types of dirty data:

- Duplicate data: Any data record that shows up more than once

- Reason: Manual entry, batch data imports, or data migration

- Outdated data: Any data that is old which should be replaced with newer and more accurate information

- Reason: People changing roles or companies, or software and systems becoming obsolete

- Incomplete data: Any data that is missing important fields

- Reason: Improper data collection or incorrect data entry

- Incorrect/inaccurate data: Any data that is complete but inaccurate

- Reason: Human error inserted during data input, fake information, or mock data

- Inconsistent data: Any data that uses different formats to represent the same thing

- Reason: Data stored incorrectly or errors inserted during data transfer

- Duplicate data: Any data record that shows up more than once

-

Common mistake to avoid

- Not checking for spelling errors: Misspellings can be as simple as typing or input errors. Most of the time the wrong spelling or common grammatical errors can be detected, but it gets harder with things like names or addresses. For example, if you are working with a spreadsheet table of customer data, you might come across a customer named "John" whose name has been input incorrectly as "Jon" in some places. The spreadsheet's spellcheck probably won't flag this, so if you don't double-check for spelling errors and catch this, your analysis will have mistake in it.

- Forgetting to document errors: Documenting your errors can be a big time saver, as it helps you avoid those errors in the future by showing how you resolved them. For example, you might find an error in a formula in your spreadsheet. You discover that some of the dates in one of your columns haven't been formatted correctly. If you make a note of this fix, you can reference it the next time your formula is broken, and get a head start on troubleshooting. Documenting your errors also helps you keep track of changes in your work, so that you can backtrack if a fix didn't work.

- Not checking for misfielded values: A misfielded value happens when the values are entered into the wrong field. These values might still be formatted correctly, which makes them harder to catch if you aren't careful. For example, you might have a dataset with columns for cities and countries. These are the same type of data, so they are easy to mix up. But if you were trying to find all of the instances of Spain in the country column, and Spain had mistakenly been entered into the city column, you would miss key data points. Making sure your data has been entered correctly is key to accurate, complete analysis

- Overlooking missing values: Missing values in your dataset can create errors and give you inaccurate conclusions. For example, if you were trying to get the total number sales from the last 3 months, but a week of transactions were missing, your calculations would be inaccurate. As a best practice, try to keep your data as clean as possible by maintaining completeness and consistency.

- Only looking at a subset of data: It is important to think about all of the relevant data when you are cleaning. This helps make sure you understand the whole story the data is telling, and that you are paying attention to all possible errors. For example, if you are working with data about bird migration patterns from different sources, but you only clean one source, you might not realize that some of the data is being repeated. This will cause problems in your analysis later on, If you want to avoid common errors like duplicates, each field of your data requires equal attention.

- Losing track of business objectives: When you are cleaning data, you might make new and interesting discoveries about your dataset - but you don't want these discoveries to distract you from the task at hand. For example, if you were working with weather data to find the average number of rainy days in your city, you might notice some interesting patterns about snowfall, too. That is really interesting, but it isn’t related to the question you are trying to answer right now.

- Not fixing the source of the error: Fixing the error itself is important. But if that error is actually a part of a bigger problem, you need to find the source of the issue. Otherwise, you will have to keep fixing that same error over and over again. For example, imagine you have a team spreadsheet that tracks everyone’s progress. The table keeps breaking because different people are entering different values. You can keep fixing all of these problems one by one, or you can set up your table to streamline data entry so everyone is on the same page. Addressing the source of the errors in your data will save you a lot of time in the long run.

- Not analyzing the system prior to data cleaning: If we want to clean our data and avoid future errors, we need to understand the root cause of your dirty data. First, you figure out where the errors come from. Maybe it is from a data entry error, not setting up a spell check, lack of formats, or from duplicates. Then, once you understand where bad data comes from, you can control it and keep your data clean.

- Not backing up your data prior to data cleaning: It is always good to be proactive and create your data backup before you start your data clean-up. If your program crashes, or if your changes cause a problem in your dataset, you can always go back to the saved version and restore it.

- Not accounting for data cleaning in your deadlines/process: All good things take time, and that includes data cleaning. It is important to keep that in mind when going through your process and looking at your deadlines. When you set aside time for data cleaning, it helps you get a more accurate estimate for ETAs for stakeholders, and can help you know when to request an adjusted ETA.

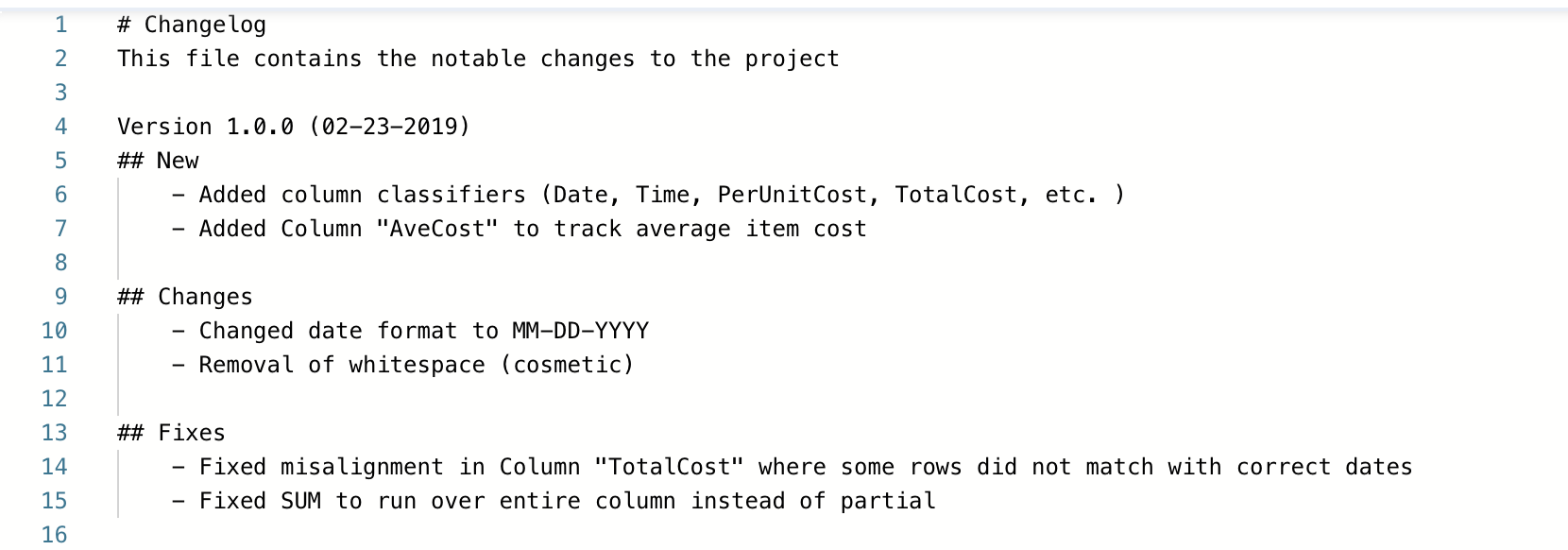

VERIFYING AND REPORTING

- Verification: A process to confirm that a data-cleaning effort was well-executed and the resulting data is accurate and reliable

- Change-log: A file containing a chronologically ordered list of modifications made to a project

- Common element of change-log:

- Data, file, formula, query, or any other component that changed

- Description of what changed

- Date of the change

- Person who made the change

- Person who approved the change

- Version number

- Reason for the change

- Common element of change-log:

- **Benefits of data documentation:

- Recover data-cleaning errors

- Inform other users of changes

- Determine quality of data